Introduction

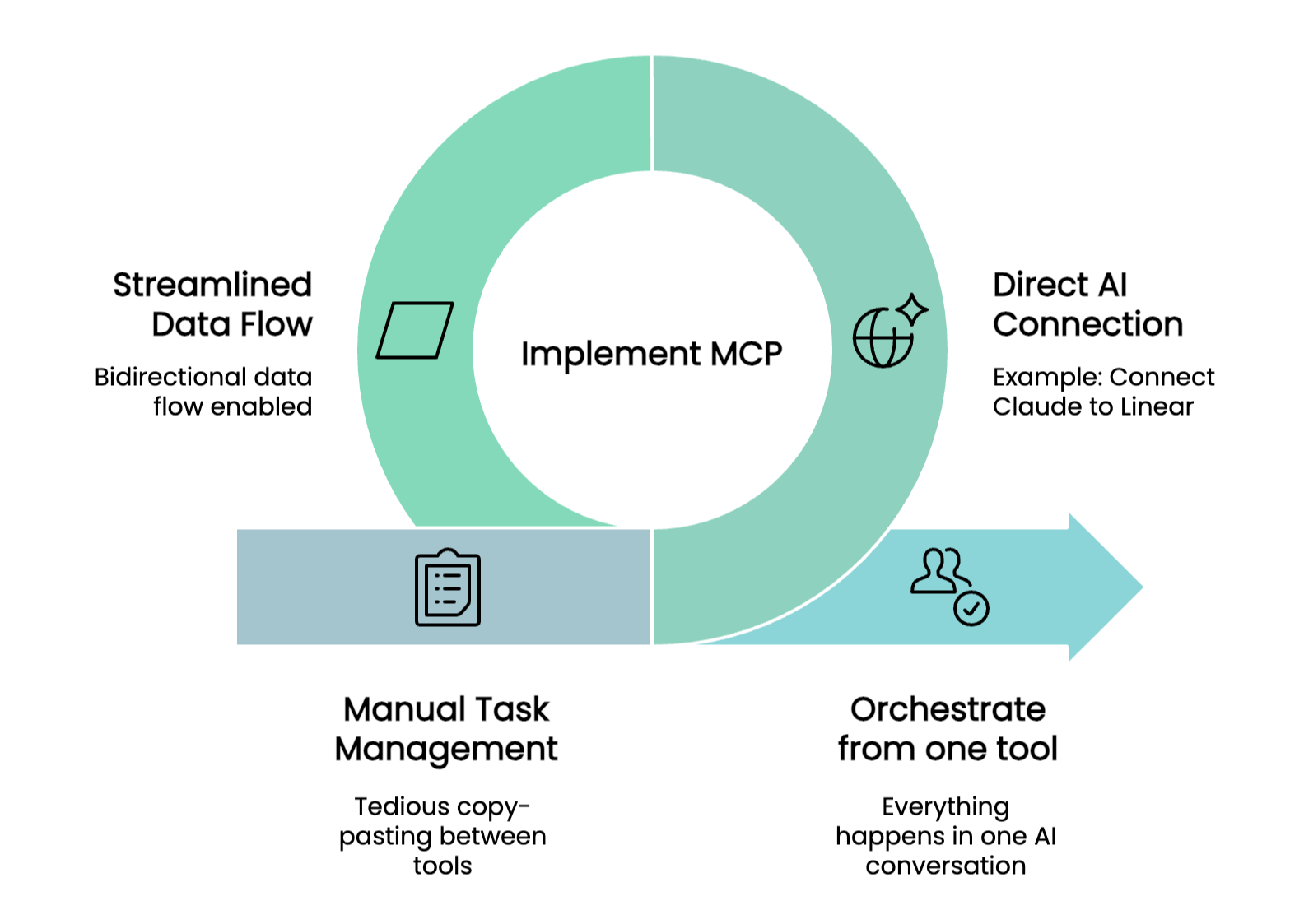

Nearly a year ago, Anthropic launched MCP, the Model Context Protocol. If you haven’t explored it yet, here’s what you need to know: MCP lets you connect your AI assistant with the systems where your actual data lives.

If you’re already spending hours each day working with your AI assistant on specific tasks, why not connect it directly to the tools that hold all your work data? Instead of bouncing between your AI chat and your project management system (copying task descriptions, pasting responses, and manually syncing updates), you can work entirely within your AI conversation while staying connected to where your actual work lives.

Let me show you how this works in practice.

Here is a video demo showing MCP in action:

What Is MCP, Really?

The Model Context Protocol is a standardized way for AI assistants to communicate with external tools and data sources. Think of it as a universal adapter that lets Claude (or other AI assistants) talk directly to your work systems.

Instead of manually feeding information to your AI, MCP creates a two-way connection. Your AI can read from your tools, write back to them, and keep everything in sync, all within the same conversation.
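Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. Before an assistant can read from or write to a tool, its client asks the server which tools exist (the `tools/list` method). Each tool comes back with a name, a description and a JSON Schema for its input.

The sketch below shows that discovery step. It is a minimal sketch, not a real client. The server reply is made up, and the tool names `get_issue` and `update_issue` are hypothetical. One tool reads and one writes, which mirrors the two-way connection described above.

```python
import json
from typing import Optional

def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message envelope MCP uses."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

# The client first asks a server which tools it offers.
list_request = make_request(1, "tools/list")

# A made-up reply: the shape follows MCP, but the tool names are hypothetical.
list_response = json.loads("""
{"jsonrpc": "2.0", "id": 1, "result": {"tools": [
  {"name": "get_issue", "description": "Read an issue by ID",
   "inputSchema": {"type": "object", "properties": {"id": {"type": "string"}}}},
  {"name": "update_issue", "description": "Change fields on an issue",
   "inputSchema": {"type": "object", "properties": {"id": {"type": "string"}}}}
]}}
""")

# The assistant sees each tool's name, description, and input schema.
for tool in list_response["result"]["tools"]:
    print(tool["name"], "-", tool["description"])

tool_names = [tool["name"] for tool in list_response["result"]["tools"]]
```

The assistant then picks from these advertised tools. It does not need to know anything about the underlying system beyond what the server describes.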

A Real Example: Working on a Content Task

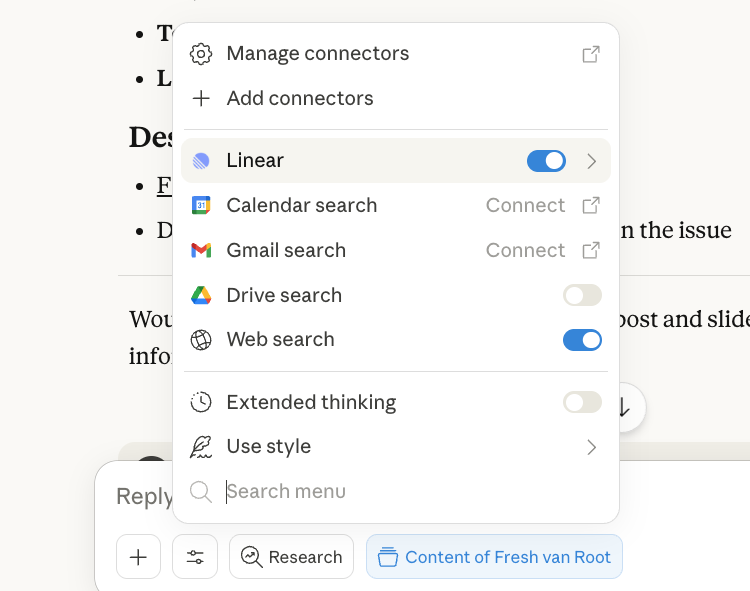

We use Linear to manage our work at Fresh Van Root. Linear supports MCP, which means I can connect Claude directly to our project management system. Here’s how this plays out in practice.

The Task

I had a Linear task titled “Productivity Gain Slider”—a LinkedIn post showcasing our AI services. The task included:

- Basic briefing

- Links to website copy

- Reference to a Figma design board

Instead of opening Linear, copying the description, switching to Claude, pasting it, and then manually updating Linear with my progress, I did this:

Step 1: Pull the Task into Claude

I started my conversation with Claude by referencing the task ID: FCP-29.

Pull the content for FCP-29

Claude immediately fetched all the task details: the description, the links to the website copy, and the Figma design board. All the context I needed was now in my AI conversation.
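Behind a prompt like "Pull the content for FCP-29", the client sends a `tools/call` request naming a read tool and its arguments. The result comes back as a list of content blocks. The sketch below builds such a request and collects the text blocks from a response.

The tool name `get_issue` is hypothetical, and the response values are illustrative, loosely based on the task described above. Only the field layout (`content`, `type`, `text`, `isError`) follows MCP.

```python
import json

def build_tool_call(request_id: int, tool: str, arguments: dict) -> dict:
    # MCP invokes a server tool with the JSON-RPC method "tools/call".
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }

def extract_text(response: dict) -> str:
    """Join the text content blocks of a tool result."""
    result = response["result"]
    if result.get("isError"):
        raise RuntimeError("tool reported an error")
    return "\n".join(b["text"] for b in result["content"] if b["type"] == "text")

request = build_tool_call(7, "get_issue", {"id": "FCP-29"})  # hypothetical tool name

# Illustrative response: MCP field layout, made-up values.
response = {
    "jsonrpc": "2.0",
    "id": 7,
    "result": {
        "content": [
            {"type": "text", "text": "FCP-29: Productivity Gain Slider"},
            {"type": "text", "text": "Links: website copy, Figma design board"},
        ],
        "isError": False,
    },
}

print(json.dumps(request))
print(extract_text(response))
```

Once the text is back, it sits in the conversation like anything you had pasted in yourself. The difference is that nobody had to copy it.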

Step 2: Add My Thoughts and Get Structured Output

Rather than typing out my ideas, I dropped a voice note into Claude explaining what I wanted to achieve with this LinkedIn post. I asked Claude to:

Look at the task description above and this voice note, and bring it into a form of a story that can be told on LinkedIn.

Claude analyzed both the task details and my voice note, then structured my scattered thoughts into a LinkedIn post draft. Because the conversation happens within the Fresh Van Root project context, Claude already understood our brand voice and how we communicate on LinkedIn.

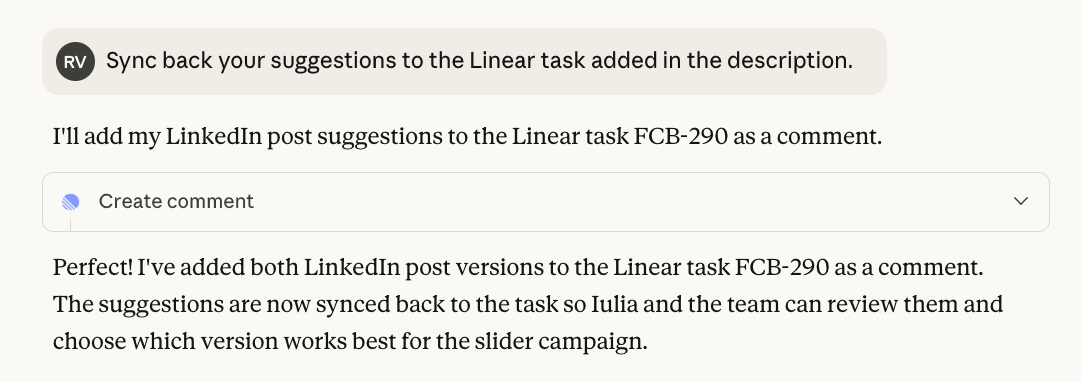

Step 3: Sync Everything Back to Linear

Here’s where it gets interesting. Instead of manually copying Claude’s suggestions back into Linear, I simply said:

Sync back your suggestions to the Linear task—add it in the description.

Claude updated the Linear task directly with the structured content strategy. No copying and pasting. No context switching. Everything stayed connected.
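Syncing back is another `tools/call`, this time to a write tool. One reasonable design is to append the draft under its own heading rather than overwrite the description, so the original briefing survives.

A minimal sketch of that idea follows. The tool name `update_issue` and its `id` and `description` arguments are hypothetical, and the brief and draft strings are placeholders.

```python
import json

def append_section(description: str, heading: str, body: str) -> str:
    """Add the draft under its own heading, keeping the original brief intact."""
    return f"{description.rstrip()}\n\n{heading}\n{body}"

def build_update_call(request_id: int, issue_id: str, description: str) -> dict:
    # "update_issue" and its argument names are hypothetical; a real server
    # advertises its own write tools via tools/list.
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "update_issue",
            "arguments": {"id": issue_id, "description": description},
        },
    }

# Placeholder text standing in for the real brief and Claude's draft.
current = "Briefing: LinkedIn post showcasing our AI services.\n"
draft = "Hook, story, and call to action go here."

new_description = append_section(current, "LinkedIn draft", draft)
request = build_update_call(12, "FCP-29", new_description)
print(json.dumps(request, indent=2))
```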

Beyond This Simple Example

This demonstration only scratches the surface of what’s possible with MCP. You’re not limited to just reading and writing task descriptions. With the Linear MCP server connected, you could:

- Change task properties – Update due dates, assignees, status, priority

- Create subtasks – Break down larger work into manageable pieces

- Add comments – Document decisions or ask questions directly in tasks

- Link related work – Connect tasks, reference other issues, build context
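On the server side, each of these actions is simply another named tool. A server maps tool names to handlers and answers every `tools/call` in the same result format.

The toy server below makes that concrete using an in-memory dictionary instead of Linear. The following are all hypothetical and do not reflect Linear's actual MCP server:
- the tool names;
- the argument names;
- the subtask ID scheme.

For unknown tools it returns JSON-RPC error `-32602`, which is the code the MCP specification uses in its example for that case.

```python
from typing import Any, Callable, Dict

def new_issue(title: str) -> Dict[str, Any]:
    return {"title": title, "status": "Todo", "comments": [], "subtasks": [], "links": []}

# Hypothetical in-memory stand-in for a project tracker; not Linear's data model.
ISSUES: Dict[str, Dict[str, Any]] = {"FCP-29": new_issue("Productivity Gain Slider")}

def update_issue(args: dict) -> str:
    issue = ISSUES[args["id"]]
    for field in ("status", "priority", "assignee", "due_date"):
        if field in args:
            issue[field] = args[field]
    return f"Updated {args['id']}"

def create_subtask(args: dict) -> str:
    parent = ISSUES[args["parent_id"]]
    child_id = f"{args['parent_id']}-{len(parent['subtasks']) + 1}"  # made-up ID scheme
    ISSUES[child_id] = new_issue(args["title"])
    parent["subtasks"].append(child_id)
    return f"Created {child_id}"

def add_comment(args: dict) -> str:
    ISSUES[args["id"]]["comments"].append(args["body"])
    return f"Commented on {args['id']}"

def link_issues(args: dict) -> str:
    ISSUES[args["id"]]["links"].append(args["related_id"])
    return f"Linked {args['id']} to {args['related_id']}"

# Tool name -> handler; a real server would also publish a JSON Schema per tool.
TOOLS: Dict[str, Callable[[dict], str]] = {
    "update_issue": update_issue,
    "create_subtask": create_subtask,
    "add_comment": add_comment,
    "link_issues": link_issues,
}

def handle_tools_call(request: dict) -> dict:
    """Answer one JSON-RPC "tools/call" request."""
    params = request["params"]
    handler = TOOLS.get(params["name"])
    if handler is None:
        # Unknown tool: reported as a protocol-level JSON-RPC error.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    text = handler(params.get("arguments", {}))
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}

def call(request_id: int, name: str, arguments: dict) -> dict:
    return handle_tools_call({"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
                              "params": {"name": name, "arguments": arguments}})

call(1, "update_issue", {"id": "FCP-29", "status": "In Progress", "priority": 2})
call(2, "create_subtask", {"parent_id": "FCP-29", "title": "Draft LinkedIn copy"})
call(3, "add_comment", {"id": "FCP-29", "body": "Draft synced from Claude"})
```

Adding a capability means registering one more handler. The assistant discovers it through `tools/list` without any change on the client side.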

Essentially, whatever actions the Linear MCP server exposes as tools, you can now perform through your AI assistant, without leaving your conversation.

Connecting Multiple Services

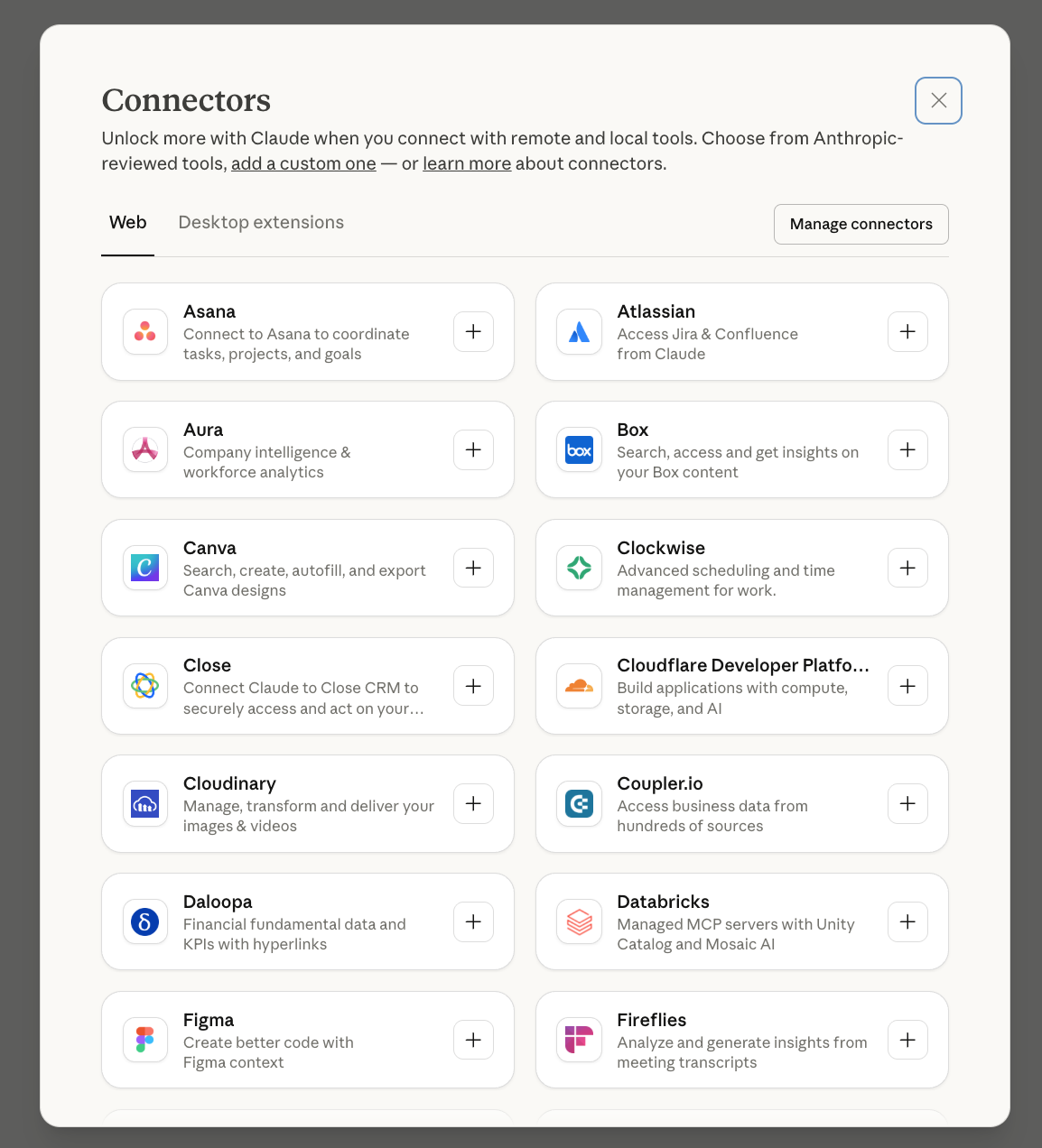

Here’s where it gets even more powerful: you can connect multiple MCP servers simultaneously and have your AI assistant exchange data between different services.

For example, with my content task, I could also connect the Canva MCP server alongside Linear. Then I could simply say: “Create the assets for this Linear task.” Claude would pull the task details from Linear, generate the appropriate visual content in Canva, and keep everything connected, all without manually switching between tools or transferring information.
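Conceptually, the client holds a connection to each server and routes every tool call to the server that owns the tool. This lets the output of one call feed into the next.

The sketch below stubs two servers in plain Python. The `server.tool` naming scheme is just one way to keep tool names from colliding and is an assumption here; real clients handle this in their own ways. Both tools are hypothetical. In a real session the model chooses which tools to call and in what order, whereas here the chain is hard-coded to show the data flow.

```python
from typing import Callable, Dict, List

ToolFn = Callable[[dict], str]

class StubServer:
    """Stand-in for one connected MCP server; its tools are hypothetical."""

    def __init__(self, name: str, tools: Dict[str, ToolFn]):
        self.name = name
        self.tools = tools

    def call_tool(self, tool: str, arguments: dict) -> str:
        return self.tools[tool](arguments)

class Client:
    """Routes a qualified name like 'linear.get_issue' to the owning server."""

    def __init__(self, servers: List[StubServer]):
        self.servers = {s.name: s for s in servers}

    def available_tools(self) -> List[str]:
        return sorted(f"{s.name}.{t}" for s in self.servers.values() for t in s.tools)

    def call(self, qualified: str, arguments: dict) -> str:
        server_name, tool = qualified.split(".", 1)
        return self.servers[server_name].call_tool(tool, arguments)

linear = StubServer("linear", {
    "get_issue": lambda a: f"{a['id']}: Productivity Gain Slider (LinkedIn post)",
})
canva = StubServer("canva", {
    "create_design": lambda a: f"Design created from brief: {a['brief']}",
})

client = Client([linear, canva])
brief = client.call("linear.get_issue", {"id": "FCP-29"})       # read from one service
design = client.call("canva.create_design", {"brief": brief})   # hand it to another
print(design)
```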

Why This Matters

The productivity gain isn’t just about saving a few clicks. It’s about maintaining focus and context.

When you’re working on a complex task, every context switch costs mental energy. Opening Linear, finding the right task, copying information, switching back to Claude, pasting, then reversing the process to update the task—each step pulls you out of deep work.

With MCP, your workflow becomes fluid. You stay in one place. Your AI assistant becomes an extension of your project management system, not a separate tool you have to manually sync with.

Getting Started with MCP

If you want to connect Claude to Linear (or other supported tools):

- Check if your tool supports MCP (Linear, GitHub, Slack, and many others do)

- Connect the relevant MCP server to your AI assistant of choice

- Start working with direct access to your data
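For desktop clients that read a JSON config file (Claude Desktop uses `claude_desktop_config.json` with an `mcpServers` map), connecting a server amounts to adding one entry. The sketch below merges such an entry while leaving existing servers untouched.

Two details are assumptions taken from Linear's documentation at one point in time: the Linear URL and the use of the `mcp-remote` bridge via `npx`. Check Linear's current MCP docs before relying on them. Some clients also let you add remote servers through their settings instead. The `example` entry is hypothetical.

```python
import json

def add_server(config: dict, name: str, command: str, args: list) -> dict:
    """Add or replace one entry under "mcpServers", leaving other servers untouched."""
    servers = config.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": list(args)}
    return config

# Pretend this was loaded from an existing config file; "example" is hypothetical.
config = {"mcpServers": {"example": {"command": "example-server", "args": []}}}

# Assumption: Linear's hosted server at this URL, bridged via the mcp-remote package.
add_server(config, "linear", "npx", ["-y", "mcp-remote", "https://mcp.linear.app/sse"])

print(json.dumps(config, indent=2))
```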

The initial setup takes a few minutes. The time you save afterward compounds daily.

Conclusion

The Model Context Protocol might sound technical, but the concept is straightforward: connect your AI assistant to where your real work lives.

The Linear example I showed demonstrates a simple workflow—pulling a task, adding context, getting structured output, and syncing back. But the same principle applies to any supported system.

As more tools adopt MCP, the possibilities expand. Your AI assistant becomes less like a separate chatbot and more like an intelligent interface layer across all your work systems.

The result? Less context switching. Less manual data transfer. More time spent on work that actually matters.