We’ve tested plenty of AI image tools over the past year, but few have promised as much as Google’s latest release. Gemini 2.5 Flash Image, better known in the community as Nano Banana, is Google DeepMind’s newest image model.

It’s designed to merge the best of both worlds:

- powerful generation

- precise editing

It promises more control, better visual quality, and smarter reasoning than anything we’ve seen so far. For creators, that means faster edits, cleaner compositions, and visuals that can hold up in real production workflows.

We wanted to see if that reputation holds up.

So we tested it in our own process using Fresh van Root office photos, team portraits, and creative prompts we’d typically write for blog visuals. Here’s what we found.

What is Nano Banana?

Nano Banana is the name for Google’s Gemini 2.5 Flash Image model. It powers image generation and editing in the Gemini app, AI Studio, and Vertex AI.

The model supposedly allows precise visual changes without re-rendering entire scenes. You can recolor a wall, swap an object, or blend multiple photos while keeping surroundings intact.

One thing to keep in mind: every image it creates now includes a visible watermark and an invisible SynthID signature for traceability. This is a move toward more responsible use, though it adds an extra cleanup step for anyone creating visuals commercially.

How it performs

When we started experimenting with the tool, we wanted to see how it fits into a real creator workflow. We edited our own office photos, combined shots, and tried prompts we would actually use in production.

Color and object edits

We began with a photo from our Fresh van Root shared space and prompted: “Replace the wooden table with a glass and chrome mid-century table.”

The edit came out clean.

The reflections and shadows stayed accurate, and the room’s layout remained intact. That already sets it apart from older AI models that often blur details or distort backgrounds.

Next, we asked it to recolor the furniture.

The model understood textures well but only changed one chair at first. After we clarified that all chairs should be recolored, it fixed the rest easily.

We pushed it further by asking Gemini to expand the space into a small working library.

The edit held up pretty well visually and could easily pass as a real photo of our office!

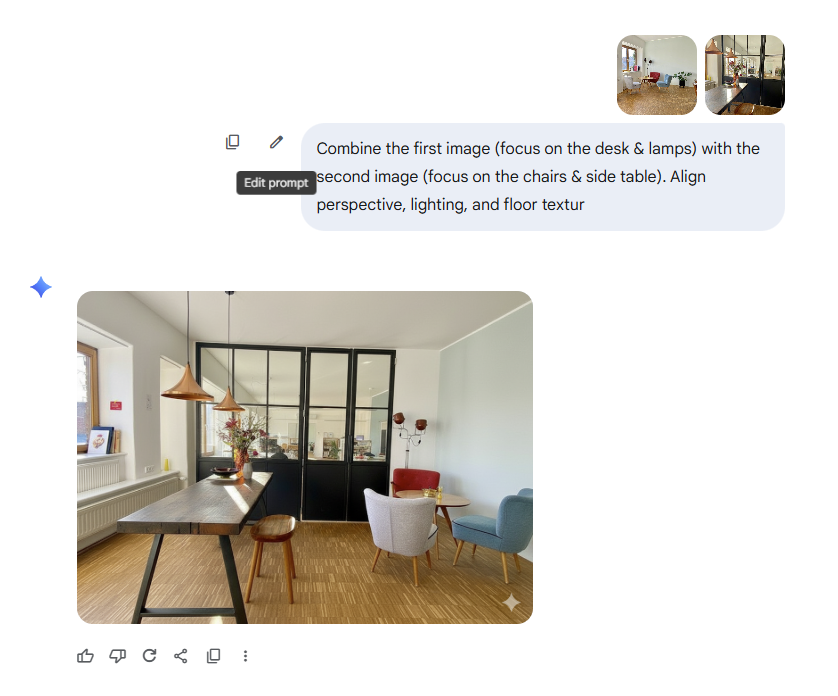

Combining images

We wanted to know how well it performs when merging multiple photos. We used two pictures from the same space, one showing the meeting table and another showing the lounge area, and asked it to create a connected open workspace.

Gemini aligned the floor patterns, window light, and overall perspective correctly. The combined image looked believable at first glance.

Things became more complicated when we tried smaller, technical edits. We asked Gemini to replace the screenshot of a Figma file displayed on a computer screen with the new AI Services page from our website. The first result cropped the screen incorrectly and added the page as a second image.

After several refinements, the model updated the display, but it also expanded the frame and introduced new visual details we hadn’t requested.

For broader edits, Nano Banana performs impressively well.

But for smaller, context-specific changes, precision is still hit or miss.

We’ve seen similar results in other tools too, as covered in our post on AI image generators for creators, where quality often depends on how well the model understands structure and light.

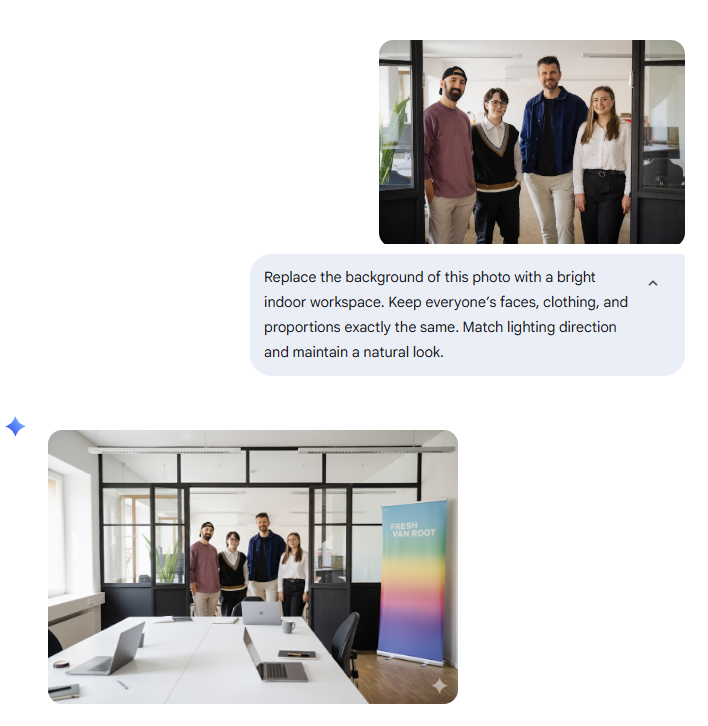

Working with real people

Next, we wanted to see how the AI handles real people instead of objects. This is where many image generators still fall short, especially when it comes to keeping faces, proportions, and details consistent.

The first test worked surprisingly well from a distance.

The model expanded the office background naturally, adding props like office supplies and even a branded banner.

But zooming in told a different story.

The face proportions shifted slightly, and some features lost definition. These small inconsistencies made the image feel less like a realistic edit and more like a reimagined version of the original photo.

We tried again with a more descriptive prompt to see how it performs when given detailed context.

“Imagine this same team standing together in a new, modern workspace filled with plants, wooden textures, and daylight streaming through tall windows. Keep every person identical but make the scene feel alive as if they’re in the middle of a conversation. Maintain the same friendly, natural energy of the original photo, with consistent lighting and soft shadows across the floor.”

This version looked closer to something you could actually use. The lighting and mood were well balanced, and the body language between the people felt natural. Yet one team member was missing entirely, replaced by what looked like another plant.

The AI captured the environment beautifully, but when it came to preserving people, it still struggled with accuracy.

Generation from scratch

Nano Banana is presented as the strongest image generator inside a chat assistant, but it still feels limited in how it interprets direction during edits. We believe its real strength lies in the original content it creates rather than in extensive editing.

In a recent post on Napkin AI, we looked at a similar idea of turning written thoughts into quick, usable visuals. Nano Banana takes that further by combining creation and editing in one space.

To test that, we gave it complete creative freedom.

“Create a dream-like landscape of floating islands made of glass, each reflecting the sunset differently. High resolution, photoreal, 16:9.”

The difference was noticeable right away. When generating from text alone, the model held detail and resolution far better than during our edit sessions.

It produced images that were sharp, consistent, and artifact-free across multiple prompts. This is something we couldn’t say for our edited photos, which often softened or warped after a few iterations.

The next prompt aimed to test how Nano Banana handles stylization and references.

“Create a Stardew farm that replicates Minecraft gameplay.”

It understood both worlds well, capturing the pixel structure of Minecraft and the warm, cozy color palette of Stardew Valley, and merged them logically.

The result looked like a screenshot from a game that could actually be developed.

For businesses creating product visuals or quick mockups, that reliability is valuable. For artists or designers, though, it might feel predictable.

The third test looked at how it performs in a storytelling context, which is relevant for creators and content marketers.

“Create a short comic strip of a dog finding its way back to his owner.”

The AI managed to keep the same character across all panels, maintain spatial logic and tell a coherent story. The art style was clean and simple, a bit like an indie web comic.

We explored a similar approach in our post on creating comic strips with AI, where we focused on how narrative consistency brings generated visuals to life.

From a production point of view, Nano Banana is exactly what you’d need to quickly visualize story ideas or educational content.

These three prompts tested different creative goals: composition, style, and storytelling. Nano Banana handled all of them with clarity and precision. It performs best when generating its own images, where the quality and stability stand out.

The trade-off is that it rarely goes beyond what’s asked.

The images are clean and consistent but lack surprise. For creators, it’s a dependable tool for polished, branded visuals, not yet a space for exploration or creative risk.

Where it still struggles

When it comes to branding and design work, Nano Banana shows its limits. The more structured or iterative the project, the more it tends to lose context.

We first tried creating a logo for our Vibecoding Vienna Meetup.

The initial version worked well. It followed the concept, used clean typography, and looked aligned with the event theme. But when we started refining, things quickly fell apart.

We asked Gemini to apply Fresh van Root’s brand colors and remove the coding symbols. It understood part of the instruction but skipped the rest.

On the next try, it confirmed the feedback but delivered the same result.

The gradient never appeared, even though the model described it back correctly.

It’s one of those moments where you sense the tool reasoning through your request but losing focus along the way. Nano Banana can produce great starting points, but once you begin fine-tuning, it forgets previous instructions.

You spend more time rephrasing than refining. It’s good for quick drafts or testing visual directions, but it’s not yet reliable for brand work where consistency and precision matter.
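One way to cut down on rephrasing is to restate every instruction in each revision request instead of relying on the model to remember earlier turns. Whether this actually improves results is untested here. The sketch below only shows the bookkeeping, and `RevisionBrief` and its methods are hypothetical names for illustration, not part of any Gemini SDK.

```python
from dataclasses import dataclass, field


@dataclass
class RevisionBrief:
    """Collects every instruction given so far so each prompt restates them all.

    Hypothetical helper for illustration; not part of any Gemini SDK.
    """
    base_request: str
    constraints: list[str] = field(default_factory=list)

    def add(self, constraint: str) -> None:
        # Skip exact duplicates so the prompt does not grow with repeats.
        if constraint not in self.constraints:
            self.constraints.append(constraint)

    def to_prompt(self) -> str:
        # Start with the base request, then list every constraint, numbered.
        lines = [self.base_request]
        if self.constraints:
            lines.append("Keep everything else unchanged and apply ALL of the following:")
            lines.extend(f"{i}. {c}" for i, c in enumerate(self.constraints, start=1))
        return "\n".join(lines)


brief = RevisionBrief("Revise the attached meetup logo.")
brief.add("Apply the brand colors.")
brief.add("Remove the coding symbols.")
print(brief.to_prompt())
```

The printed text can be pasted into the chat as a single message, so each revision carries the full list of requirements rather than only the latest correction.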

We ran another test to see how it handles structured layouts.

This time, we asked it to create a LinkedIn slider to promote our AI Services. The first attempt produced a strong concept but stopped after four slides, ignoring the rest of the outline.

A follow-up prompt delivered the full set of seven, but the sizing was off. When we asked for 16:9 dimensions and brand colors, it generated an entirely new deck with different copy and graphics.

In practice, this means you can’t build upon what you already have. Each revision resets the project instead of continuing it. For creators who need iterative control, keeping the same layout, copy, and structure becomes a real challenge.
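Because sizing can drift between revisions, a quick check on exported slides helps catch off-ratio images before they are published. This is a minimal sketch under our own assumptions: the function name `matches_aspect`, the 1% tolerance, and the sample slide sizes are all illustrative.

```python
from fractions import Fraction


def matches_aspect(
    width: int,
    height: int,
    target: Fraction = Fraction(16, 9),
    tolerance: float = 0.01,
) -> bool:
    """Return True if width/height is within `tolerance` (relative) of `target`."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    actual = Fraction(width, height)
    # Relative deviation from the target ratio, e.g. 0.01 means 1%.
    return abs(actual - target) / target <= tolerance


# Hypothetical slide sizes as (width, height) in pixels.
slides = {
    "slide-1": (1920, 1080),
    "slide-2": (1080, 1080),
    "slide-3": (1366, 768),
}
off_ratio = [name for name, (w, h) in slides.items() if not matches_aspect(w, h)]
print(off_ratio)  # ['slide-2']
```

The tolerance allows for common near-16:9 sizes such as 1366×768 while still flagging square or portrait output.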

For now, Nano Banana feels dependable only when creating something entirely new, not when shaping content that already exists.

Conclusion

After testing Nano Banana across real projects, it feels like a clear step forward for Gemini, even if it is not fully ready for creators who rely on precision and iteration. It is fast, stable, and capable of producing high-quality visuals directly in chat, but it still struggles when the workflow demands refinement or consistency.

In our tests, it performed best when generating images from scratch. The quality and structure held up across very different styles, from cinematic landscapes to pixel worlds and short comics, and the results were sharper than anything we achieved through edits.

When it came to structured or branded work such as logos or decks, the process showed its limits. The AI sometimes lost track of earlier instructions between revisions, which made it harder to build on previous work.

That seems less like a flaw and more like a reflection of what the tool is built for. Nano Banana is positioned closer to content creation and exploration than to on-brand design systems.

This is not just our experience.

On Reddit, creators described a similar mix of excitement and frustration. One user called Nano Banana “fantastic, but the online hype is overblown,” noting that it sometimes returns the same image unchanged. Others mentioned that while it feels polished, it struggles with longer or layered prompts, often losing context halfway through.

The same pattern shows up on Google’s support forum, where users say Gemini confirms their edits but still produces identical results, as if it forgets the conversation midway.

Still, Nano Banana represents a step toward something genuinely useful.

For creators working with Gemini’s own generated visuals or quick campaign drafts, it’s reliable enough to include in the process.

Nano Banana does not transform image generation, but it makes it easier and faster to create clean, usable visuals without leaving Gemini. It is not the tool that replaces your design workflow yet, but it is one that can quietly enhance it.